The US group CrowdStrike reportedly caused a problem with Microsoft’s operating system, resulting in disruption around the world

US software provider CrowdStrike is being blamed for a faulty security software update that affected Microsoft’s Windows operating system and caused disruption around the world. CrowdStrike’s software is widely used by businesses across the globe to help secure Windows PCs and servers.

CrowdStrike has publicly criticised Microsoft over a series of attacks that Microsoft has had to contend with in recent months. Earlier this year, CrowdStrike launched a package designed to work alongside Microsoft’s Defender anti-virus product.

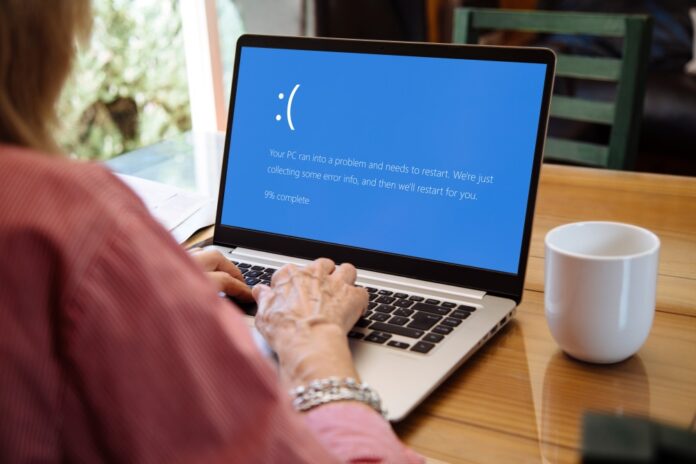

The Verge reported, “Thousands of Windows machines are experiencing a Blue Screen of Death (BSOD) issue at boot today, impacting banks, airlines, TV broadcasters, supermarkets, and many more businesses worldwide. A faulty update from cybersecurity provider CrowdStrike is knocking affected PCs and servers offline, forcing them into a recovery boot loop so machines can’t start properly.”

Although some services have been restored, many have not. The outage affected companies in countries from Australia to the UK and caused problems for Azure customers and for aviation, with check-in delays and flights grounded in Europe and the US. It has hit banks; UK broadcaster Sky, which went off air; the London Stock Exchange; retailers Woolworths and 7-Eleven in Australia; and rail services such as the UK’s Southern Rail. Many workers cannot access the Microsoft 365 suite of applications.

UPDATE: It is now known that a single software update by CrowdStrike caused an IT meltdown in countries that rely heavily on Microsoft. CrowdStrike’s CEO said he was “very sorry”. An apology does nothing to repair the harm and cost of the outage: from millions of people stranded in airports or on the railways, to those who missed medical appointments or suffered commercially or personally because of failed financial transactions, to media companies that lost advertising income because they could not get on air.

Not to mention the second wave of damage perpetrated by criminals, who will waste no time in exploiting the opportunities presented.

Perhaps the most frightening thing of all is that the general attitude seems to be a shrug of the shoulders and an acknowledgement that this is the shape of things to come. So it probably won’t be the “biggest IT outage ever” for very long.

Let’s just step back and consider. A single software update by one company brought down half the internet, and gave hostile states a glorious demonstration of what could be wrought. How have we ended up in this situation? The internet was designed to withstand nuclear war, but not human error massively compounded by automation.

In February this year, Colin Bannon, CTO of BT Global, made some pointed comments about the dangers of automation in this FutureNet World interview, saying, “Most of the big outages in the last year have been caused [by] human error, then automation magnified the blast radius, pushing something that was wrong to every box”. In one instance, this included disabling the code reader on the highly secure door of a data centre that had to be accessed to fix the issue.

Bannon says, “That’s why an outage can take 12 hours now, because even if they know what the problem is, they don’t have that remediation.” That is indeed the case with CrowdStrike, and it is likely to be weeks, if not months, before the fallout from the outage is fully resolved.

Bannon also states that BT’s way of avoiding such disasters is to think “about the old school techniques of diversity, not just resilience”, which he outlines in the article here. Today UK regulator Ofcom said it has fined BT £17.5 million for being “ill-prepared to respond to a catastrophic failure of its emergency call handling service” last year. During the incident, nearly 14,000 call attempts – from 12,392 different callers – were unsuccessful.

Solutions for telco network resilience might not be the same as for IT, but both need to put resilience at the top of their agendas.

This is an updated version of the article published on Friday 19 July.