Testing throughput to ensure high speed mobile broadband services

This article reviews the technical issues affecting throughput on an LTE network, from both the base station and the handset side, and then discusses the techniques the industry is introducing to measure throughput. Finally, it analyses some typical throughput test results to explain how they relate to the design of LTE base stations and handsets. It was written by Jonathan Borrill, Director of Marketing Strategy, Anritsu.

The mobile industry is now heavily focused on two key areas of development and innovation: first, applications and services driven by new product technologies such as touch screens and user-friendly operating systems; and second, affordable but attractive subscription packages that encourage use of these innovations. Behind the success of both lies the need to give users a high-speed data and browsing experience that makes the services easy to use, delivered over cost-efficient networks and technologies. To enable this, LTE introduces new technologies, such as OFDMA and resource scheduling, that achieve very high data rates and use radio spectrum efficiently. A key test technique behind this is the measurement of “throughput” on a device: the actual data rate it achieves under given network conditions.

The mobile communications industry has been moving rapidly into mobile data services over the last 10 years, first with the introduction of GPRS technology to GSM networks to provide a more efficient data service, and then with the introduction of HSPA into 3G networks. Both technologies aim to use radio resources (radio capacity) more efficiently, so that more users can access data services from a single base station and get higher data rates when downloading and uploading content. With both technologies, the limit on data rates was the air interface, because the backhaul connection to the base station offered a much higher data rate (e.g. 2-10 Mb/s). With LTE, a single base station sector can provide 100-150 Mb/s of download capacity, so the key issues are now to provide enough backhaul capacity to carry the site's data traffic, and to have appropriate control mechanisms in the base station to share this capacity among the users in the cell according to their data rate demands and the quality of each user's radio link.
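To put the backhaul problem in numbers, the short Python sketch below estimates the worst-case backhaul a multi-sector LTE site would need if every sector ran at its peak at the same moment. The three-sector site, the function name `peak_site_backhaul_mbps` and the 10% transport-overhead factor are illustrative assumptions, not figures from this article; in practice backhaul is often dimensioned below this simultaneous-peak case.

```python
def peak_site_backhaul_mbps(sectors: int, peak_per_sector_mbps: float,
                            transport_overhead: float = 0.10) -> float:
    """Worst-case backhaul demand if every sector peaks at the same time.

    transport_overhead is a hypothetical fraction added for tunnelling and
    IP/Ethernet headers on the backhaul link.
    """
    if sectors < 1 or peak_per_sector_mbps < 0 or transport_overhead < 0:
        raise ValueError("invalid site parameters")
    return sectors * peak_per_sector_mbps * (1.0 + transport_overhead)


if __name__ == "__main__":
    # Hypothetical three-sector site at the 150 Mb/s upper figure from the text.
    lte_site = peak_site_backhaul_mbps(sectors=3, peak_per_sector_mbps=150.0)
    legacy_link = 10.0  # upper end of the 2-10 Mb/s legacy backhaul range
    print(f"LTE site peak backhaul: {lte_site:.0f} Mb/s")
    print(f"That is {lte_site / legacy_link:.1f}x a 10 Mb/s legacy link")
```

Even before any overhead, three sectors at 150 Mb/s need 450 Mb/s, which is why the backhaul link, not the air interface, becomes the first bottleneck to address.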

End-to-end network innovation

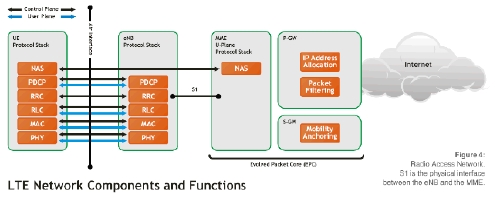

The move to LTE addressed the backhaul capacity challenge with a change in network architecture and technology. In the LTE architecture the base station makes many more of the decisions about delivering services to users: it only needs to receive a user's IP data packets and can manage their delivery locally. This differs from 2G/3G networks, where the BSC or RNC made these decisions and then passed the base station data already formatted in network-specific protocols for delivery to the user. The backhaul links have also changed, from the fixed-capacity ATM links used in 3G to “All-IP” transport for connecting to LTE base stations. This lets operators use existing IP network infrastructure to provide convenient, high data rate links to base stations on the S1 interface. The trend had already started in 3G with the introduction of Ethernet-based Iub links for carrying HSPA data.

Base station scheduler as key controller of radio resources

In LTE networks, the air interface technology has evolved further from the HSPA technology used in 3G networks. The access technology has changed from WCDMA (a code-based access scheme) to OFDMA (a frequency/time-block-based access scheme). This gives greater spectral efficiency (more data capacity in a given bandwidth) and more flexibility to manage many users and share the radio link capacity between them. The key element in this new scheme is the “scheduler” in the base station. It decides how and when each packet of data is sent to each user, then checks that delivery succeeded and retransmits the packet if it did not.
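The article does not say which algorithm a scheduler uses. As one illustration, the Python sketch below implements proportional fair scheduling, a common textbook choice, under a simplifying assumption: each 1 ms TTI goes to a single user, whereas a real LTE scheduler splits resource blocks among several users per TTI. The `User` class, `schedule_tti` function and the smoothing factor `alpha` are hypothetical names for this sketch.

```python
from dataclasses import dataclass


@dataclass
class User:
    name: str
    inst_rate: float        # Mb/s achievable this TTI, derived from the user's channel report
    avg_rate: float = 1e-6  # smoothed served rate; tiny start value avoids division by zero


def schedule_tti(users: list[User], alpha: float = 0.1) -> User:
    """Give one 1 ms TTI to a single user using the proportional-fair metric.

    The metric inst_rate / avg_rate favours users whose channel is good
    relative to what they have recently been served. After the decision,
    every user's average is updated with an exponential moving average.
    """
    chosen = max(users, key=lambda u: u.inst_rate / u.avg_rate)
    for u in users:
        served = u.inst_rate if u is chosen else 0.0
        u.avg_rate = (1 - alpha) * u.avg_rate + alpha * served
    return chosen


if __name__ == "__main__":
    users = [User("A", inst_rate=40.0), User("B", inst_rate=10.0)]
    picks = [schedule_tti(users).name for _ in range(100)]
    print("TTIs given to A:", picks.count("A"), "to B:", picks.count("B"))
```

With constant channels, the two users end up sharing TTIs roughly equally, so user A, with the better link, receives about four times the data. This is the kind of capacity-sharing trade-off the scheduler makes every millisecond.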

L1 performance vs L3/PDCP throughput

The headline performance of LTE networks has been specified (and advertised) as 100 Mb/s, so it is important to understand what this number means. It is actually the capacity of the base station cell to transmit over the radio link (Layer 1). That 100 Mb/s is shared across all users on the base station according to various parameters: in theory the base station can allocate all of the capacity to one user, who then gets 100 Mb/s, or allocate different portions of it to several users. This sharing is decided every 1 ms, so the capacity given to each user can change every 1 ms.

However, what is being shared is radio link capacity, and it carries not only new data for each user but also retransmissions of earlier packets that users did not receive successfully. If the base station scheduler allocates too high a data rate to users (by using higher-rate modulation and coding schemes), more of the capacity can end up being used to retransmit that data at a lower rate. There is therefore a balancing act between sending data at the highest possible rate (to make the most of the available radio resources) and sending at so high a rate that more capacity is consumed by retransmissions. When retransmission rates are high, the air interface is still used at full capacity, but users experience a much lower data rate (the Layer 3/PDCP data rate). In this case (sending first at a high rate and then retransmitting at a lower one), the data rate users experience is actually lower than if the lower rate had been chosen in the first place.

Layer 3 is the higher layer in the protocol stack, and its data rate is the actual data rate of the radio link as seen by an external application. External applications' IP data enters the LTE protocol stack through a function called PDCP (Packet Data Convergence Protocol).
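The balancing act described above can be checked with a little arithmetic. The Python sketch below uses a deliberately simplified model (an assumption, not the 3GPP procedure): every block is first sent at one rate, a fixed fraction fails, and each failed block is resent once at a lower rate where it always succeeds. The function name `delivered_rate_mbps` and the example rates and failure probabilities are hypothetical.

```python
def delivered_rate_mbps(first_rate: float, failure_prob: float,
                        retx_rate: float) -> float:
    """Average user-level (PDCP) rate for a simplified single-user link.

    Assumed model: every block is first sent at first_rate (Mb/s of air time);
    with probability failure_prob it is not decoded and is resent once at
    retx_rate, where it is assumed to succeed. Expected air time per megabit
    of user data is 1/first_rate + failure_prob/retx_rate, and the delivered
    rate is its reciprocal. The air interface is busy throughout, so the
    Layer 1 rate stays high while the delivered rate drops.
    """
    if first_rate <= 0 or retx_rate <= 0 or not 0.0 <= failure_prob <= 1.0:
        raise ValueError("invalid link parameters")
    return 1.0 / (1.0 / first_rate + failure_prob / retx_rate)


if __name__ == "__main__":
    aggressive = delivered_rate_mbps(100.0, 0.5, 50.0)    # high rate, half the blocks fail
    conservative = delivered_rate_mbps(75.0, 0.05, 50.0)  # lower rate, few failures
    print(f"aggressive:   {aggressive:.1f} Mb/s")    # 50.0
    print(f"conservative: {conservative:.1f} Mb/s")  # 69.8
```

Under these assumptions, starting at 100 Mb/s delivers about 50 Mb/s to the user, while starting at 75 Mb/s delivers almost 70 Mb/s, which matches the article's point that an over-ambitious first choice lowers the user's experienced rate.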

Importance of UE measurement reports and UE decoding capability

A key input to the base station scheduler when it selects the data rate for each user is the set of “UE measurement reports” that each user sends back to the base station. These reports contain measurements of critical radio link quality parameters, which let the base station select the best data rate for each user. LTE also uses a HARQ (Hybrid Automatic Repeat reQuest) process so that the UE can acknowledge correct reception of each data packet. In this process, the base station sends each data packet and then waits for a positive acknowledgement of correct reception. If the response is negative (incorrect reception) or no acknowledgement arrives, the packet is rescheduled for another attempt, now at a lower data rate that is more likely to succeed.
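The acknowledge-and-retry loop described above can be sketched in Python as follows. This is a simplified model, not the LTE MAC procedure: it ignores soft combining of retransmissions and parallel HARQ processes, and expresses the data rate as a CQI index (1 to 15). The names `Feedback`, `deliver_packet`, `max_attempts` and `backoff` are hypothetical.

```python
from enum import Enum
from typing import Callable


class Feedback(Enum):
    ACK = "ack"    # UE decoded the packet correctly
    NACK = "nack"  # UE reports a decoding failure
    DTX = "dtx"    # no acknowledgement received from the UE


def deliver_packet(start_cqi: int, ue_feedback: Callable[[int], Feedback],
                   max_attempts: int = 4,
                   backoff: int = 2) -> tuple[bool, list[tuple[int, Feedback]]]:
    """Send one packet, retrying at a lower CQI-derived rate until it is ACKed.

    A NACK and a missing acknowledgement (DTX) are both treated as failure,
    as in the text. max_attempts and backoff are hypothetical parameters.
    """
    history: list[tuple[int, Feedback]] = []
    cqi = start_cqi
    for _ in range(max_attempts):
        fb = ue_feedback(cqi)
        history.append((cqi, fb))
        if fb is Feedback.ACK:
            return True, history
        cqi = max(1, cqi - backoff)  # repeat attempt at a lower data rate
    return False, history


if __name__ == "__main__":
    # Hypothetical UE whose current channel supports CQI 9 at most.
    def ue(cqi: int) -> Feedback:
        return Feedback.ACK if cqi <= 9 else Feedback.NACK

    ok, attempts = deliver_packet(start_cqi=12, ue_feedback=ue)
    print(ok, [(c, f.name) for c, f in attempts])
```

Starting at CQI 12 on a channel that supports only CQI 9, the packet is sent three times (CQI 12, 10, then 8) before it is acknowledged. Every failed attempt consumes air-interface capacity without delivering user data.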

Application of UE throughput testing

The previous section shows that the UE's ability to receive each data packet correctly, together with its ability to measure radio propagation and reception conditions accurately, is critical to the data throughput of an LTE network. If a UE makes inaccurate measurement reports, the base station will send more data at a rate that is too high for the UE, and will then be forced to retransmit it at a lower data rate. If the UE receiver is poorly implemented, it will fail to decode data even when the base station has calculated that the chosen data rate should be suitable, and again the base station will be forced to retransmit at a lower rate. Because retransmissions consume shared cell capacity, both phenomena lower the data rate perceived by all users on the network, pushing it further below the expected 100 Mb/s. To prevent this, a set of UE throughput tests and test equipment is now available to measure and confirm that a particular UE implementation performs to the level the base station expects.
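To see why an optimistic report costs capacity, the Python sketch below uses the 4-bit CQI table from 3GPP TS 36.213 (Table 7.2.3-1) to compare the useful bits delivered per resource element when a UE reports its true channel quality, reports too low, or reports too high. The retransmission model (one wasted attempt, then one successful retransmission at the supported CQI) is an assumption for illustration, as are the names `efficiency` and `delivered_efficiency`. A poorly implemented receiver behaves like a UE whose supported CQI is lower than its own reports claim.

```python
# 4-bit CQI table from 3GPP TS 36.213 (Table 7.2.3-1):
# CQI index -> (bits per modulation symbol, code rate x 1024)
CQI_TABLE = {
    1: (2, 78), 2: (2, 120), 3: (2, 193), 4: (2, 308), 5: (2, 449),
    6: (2, 602), 7: (4, 378), 8: (4, 490), 9: (4, 616), 10: (6, 466),
    11: (6, 567), 12: (6, 666), 13: (6, 772), 14: (6, 873), 15: (6, 948),
}


def efficiency(cqi: int) -> float:
    """Spectral efficiency in information bits per resource element."""
    bits_per_symbol, rate_x1024 = CQI_TABLE[cqi]
    return bits_per_symbol * rate_x1024 / 1024


def delivered_efficiency(reported_cqi: int, supported_cqi: int) -> float:
    """Useful bits per resource element spent on one transport block.

    Simplified model (an assumption, not a 3GPP procedure): the first
    transmission uses the reported CQI and succeeds only if the channel
    really supports it; otherwise those resources are wasted and a single
    retransmission at the supported CQI succeeds.
    """
    resources_per_bit = 1 / efficiency(reported_cqi)
    if reported_cqi > supported_cqi:
        resources_per_bit += 1 / efficiency(supported_cqi)
    return 1 / resources_per_bit


if __name__ == "__main__":
    supported = 9  # hypothetical CQI the UE's channel and receiver can really handle
    for reported in (7, 9, 11):
        print(f"reported CQI {reported:2d}: "
              f"{delivered_efficiency(reported, supported):.2f} bits/RE")
```

In this model, over-reporting by two CQI steps delivers less than under-reporting by the same amount, because the wasted first attempt is paid for in full before the retransmission.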

The available test environments are based both on the 3GPP conformance test specifications (TS 36.521), which ensure quality meets the minimum requirements, and on R&D tools developed for deeper analysis and debugging of possible errors in UE implementations. Both are built around a System Simulator (SS), which simulates, controls and configures the LTE network, and a fading simulator, which controls and configures the radio link quality between the UE and the network. With this architecture, the UE can be put through a set of standard reference tests that benchmark it against the 3GPP standards and against other UE implementations. Because it is based on simulator technology, it can create precise and repeatable test conditions that cannot be guaranteed in live network testing. This technology therefore forms the basis for comparative testing and benchmarking of UEs, and many network operators use it to evaluate the performance of UEs from their suppliers.
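Repeatability is what makes simulator results comparable between UEs. The Python sketch below illustrates the idea with a seeded random-number generator: the same seed always reproduces the same fading sequence, so two devices can be tested against identical radio conditions. It is a deliberately crude stand-in for a fading simulator (independent Rayleigh fading per 1 ms TTI, with no Doppler correlation and no multipath delay profile such as the 3GPP EPA/EVA/ETU models), and the function name `rayleigh_gains_db` is hypothetical.

```python
import math
import random


def rayleigh_gains_db(seed: int, n_tti: int) -> list[float]:
    """Per-TTI channel power gain in dB for i.i.d. Rayleigh block fading.

    A deliberately simple stand-in for a fading simulator: one complex
    Gaussian tap per 1 ms TTI with unit average power, no Doppler correlation
    between TTIs and no multipath delay profile. A fixed seed reproduces
    exactly the same fading sequence on every run.
    """
    rng = random.Random(seed)
    sigma = math.sqrt(0.5)  # per-component std dev so that E[|h|^2] = 1
    gains = []
    for _ in range(n_tti):
        h = complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma))
        power = max(abs(h) ** 2, 1e-12)  # guard against log10(0)
        gains.append(10 * math.log10(power))
    return gains


if __name__ == "__main__":
    run1 = rayleigh_gains_db(seed=2024, n_tti=1000)
    run2 = rayleigh_gains_db(seed=2024, n_tti=1000)
    print("identical runs:", run1 == run2)
    print(f"deepest fade: {min(run1):.1f} dB")
```

A live network offers no equivalent of the fixed seed, which is why drive-test results are hard to compare directly between devices.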

Utilising the controllable nature of simulator testing, UE developers also use the technology to investigate UE performance in more depth. Because they can carefully select and control each network and radio link parameter, they can investigate specific issues in detail and then measure performance improvements and fixes accurately and quantifiably to confirm correct operation. A key aspect of this testing concept is that the test engineer and designer can see both the Layer 1 (radio link layer) throughput and the Layer 3/PDCP (actual user data) throughput. This shows testers and designers how much throughput capacity is being used to retransmit incorrectly received data and how much carries actual user data.

Typical test results start with pass/fail verdicts from the conformance test specifications, which provide the baseline for 3GPP compliance and basic performance. Going deeper, the testing concentrates on evaluating Layer 1 and Layer 3 throughput separately, and the ratio between them. Throughput is measured by counting the PDUs (Protocol Data Units) transmitted and recording their size and configuration: Layer 1 performance is measured as MAC PDUs, and Layer 3 throughput as PDCP PDUs. We also need to monitor the UE reports that show the measured signal strength and quality (RSRP, RSRQ), the channel quality for data reception (CQI) and the acknowledgement of correctly received data (ACK/NACK). The base station uses these reports to select the optimum format for transmitting the next data packet. In addition, where MIMO is used, the UE sends two further reports to help the base station select the optimum MIMO precoding: the Precoding Matrix Indicator (PMI), which reports the preferred precoding matrix for the current multipath environment, and the Rank Indicator (RI), which reports the number of separate MIMO data streams (layers) that the UE calculates the channel can support.
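The PDU-counting measurement above can be sketched in a few lines of Python. The log format (`PduRecord` with a layer name, size and timestamp) and the function names are hypothetical, since the article does not describe how a given test system exports its data. Note that MAC PDUs also carry headers and padding, and the MAC count includes retransmitted PDUs, so the PDCP/MAC ratio sits below 1 even on a clean link; it is a drop in this ratio that points to retransmissions.

```python
from dataclasses import dataclass


@dataclass
class PduRecord:
    layer: str       # "MAC" or "PDCP" (hypothetical log format)
    size_bytes: int
    time_s: float    # capture timestamp in seconds


def throughput_mbps(records: list[PduRecord], layer: str,
                    start_s: float, end_s: float) -> float:
    """Throughput of one layer from the PDUs captured in [start_s, end_s)."""
    if end_s <= start_s:
        raise ValueError("empty measurement window")
    bits = sum(8 * r.size_bytes for r in records
               if r.layer == layer and start_s <= r.time_s < end_s)
    return bits / (end_s - start_s) / 1e6


def pdcp_to_mac_ratio(records: list[PduRecord], start_s: float, end_s: float) -> float:
    """Share of Layer 1 (MAC) throughput that reaches the user as PDCP data."""
    mac = throughput_mbps(records, "MAC", start_s, end_s)
    pdcp = throughput_mbps(records, "PDCP", start_s, end_s)
    return pdcp / mac if mac > 0 else 0.0


if __name__ == "__main__":
    log = [PduRecord("MAC", 12500, 0.0), PduRecord("MAC", 12500, 0.5),
           PduRecord("PDCP", 10000, 0.2), PduRecord("PDCP", 10000, 0.7)]
    print(f"MAC:  {throughput_mbps(log, 'MAC', 0.0, 1.0):.2f} Mb/s")
    print(f"PDCP: {throughput_mbps(log, 'PDCP', 0.0, 1.0):.2f} Mb/s")
    print(f"PDCP/MAC ratio: {pdcp_to_mac_ratio(log, 0.0, 1.0):.2f}")
```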

As the propagation conditions between the UE and the base station degrade, the PDU throughput should fall. At the same time, the UE should report lower RSRQ and CQI values, indicating poorer link quality. It is therefore important to monitor these reports and characterise them across a range of propagation conditions. As conditions degrade, the base station scheduler should respond by selecting lower data rates (modulation type and coding rate), and hence we should see lower PDCP PDU data rates. In an optimum implementation of both base station and UE, the MAC PDU rate should fall at the same rate. As multipath diversity decreases and the correlation between the different paths increases, the data rate gain from MIMO should shrink, which also shows up as a lower PDCP PDU rate.
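Characterising the reports across a range of conditions lends itself to an automated check. The Python sketch below takes a sweep ordered from the best to the worst condition and flags the departures from the trends described above: reported CQI or MAC throughput rising as conditions worsen, or PDCP throughput falling faster than MAC throughput. The `SweepPoint` fields, the use of SNR as the condition axis and the 0.1 ratio tolerance are hypothetical choices for this sketch.

```python
from typing import NamedTuple


class SweepPoint(NamedTuple):
    snr_db: float      # hypothetical link-quality axis set on the fading simulator
    cqi: int           # CQI reported by the UE at this condition
    mac_mbps: float    # Layer 1 (MAC PDU) throughput
    pdcp_mbps: float   # user (PDCP PDU) throughput


def check_sweep(points: list[SweepPoint], ratio_tolerance: float = 0.1) -> list[str]:
    """Flag departures from the expected trends as conditions worsen.

    points must be ordered from the best to the worst condition. Expected:
    reported CQI and MAC throughput never rise, and the PDCP/MAC ratio stays
    within ratio_tolerance of its value at the best condition.
    """
    if not points:
        return []
    if points[0].mac_mbps <= 0:
        raise ValueError("reference point has no MAC throughput")
    warnings = []
    for prev, cur in zip(points, points[1:]):
        if cur.cqi > prev.cqi:
            warnings.append(f"{cur.snr_db} dB: reported CQI rose as conditions worsened")
        if cur.mac_mbps > prev.mac_mbps:
            warnings.append(f"{cur.snr_db} dB: MAC throughput rose as conditions worsened")
    reference = points[0].pdcp_mbps / points[0].mac_mbps
    for p in points:
        if p.mac_mbps > 0 and p.pdcp_mbps / p.mac_mbps < reference - ratio_tolerance:
            warnings.append(f"{p.snr_db} dB: PDCP rate falling faster than MAC rate")
    return warnings
```

An empty list means the UE and scheduler track each other as the text expects. Any warning identifies the condition at which to start fault tracing.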

Where the PDCP PDU rate falls more than the MAC PDU rate, failed packet delivery and retransmission are the likely cause. This can be monitored through the ACK/NACK reports from the UE, which will show more NACKs. Because these retransmissions reduce both network capacity and the data rate users perceive, the aim must be to minimise them. Faults are traced using the measurements above, confirming that the UE is reporting the link characteristics correctly and that the base station scheduler is selecting the optimum modulation and coding scheme for the channel conditions.
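One concrete yardstick for the ACK/NACK reports comes from the CQI definition itself: 3GPP TS 36.213 defines the reported CQI as the highest index at which a transport block could be received with an error probability not exceeding 0.1. If the scheduler follows the reports, first-transmission NACKs should therefore stay near 10%. The Python sketch below flags a capture that clearly exceeds this figure. The function names and the 5% margin are hypothetical, and a base station may deliberately run its link adaptation at a different BLER target.

```python
# TS 36.213 defines the reported CQI as the highest index at which a transport
# block could be received with an error probability not exceeding 0.1.
CQI_BLER_TARGET = 0.10


def initial_nack_rate(feedback: list[str]) -> float:
    """Fraction of NACKs among ACK/NACK reports for first transmissions."""
    if not feedback:
        raise ValueError("no feedback recorded")
    return feedback.count("NACK") / len(feedback)


def excessive_nacks(feedback: list[str], margin: float = 0.05) -> bool:
    """True when first-transmission NACKs clearly exceed the CQI target.

    margin is a hypothetical allowance for measurement noise and for
    schedulers that deliberately run a slightly different BLER target.
    """
    return initial_nack_rate(feedback) > CQI_BLER_TARGET + margin


if __name__ == "__main__":
    log = ["ACK"] * 75 + ["NACK"] * 25   # hypothetical capture
    print(f"NACK rate {initial_nack_rate(log):.0%}, excessive: {excessive_nacks(log)}")
```

A persistently high NACK rate with a correctly configured scheduler points back at the UE: either its CQI reports are optimistic or its receiver is failing at rates it claims to support.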

Summary

LTE networks are designed for end-to-end IP packet data services, and the air interface is optimised to deliver packet data streams with the most efficient use of radio resources. The mechanisms in the base station and UE form a feedback loop that selects the most suitable settings for transmitting each individual data packet, based on reported channel conditions and adaptation of the OFDMA configuration to match them. Using an accurate, controllable and repeatable laboratory test environment, we can measure the throughput of an LTE link to see both the actual air interface data rate and the data rate users perceive. The same environment monitors the associated reports to confirm correct operation, baselines the performance of different implementations, and identifies possible areas for further optimisation of a design.