The new GPU architecture, reportedly arriving in 2026, demonstrates that Nvidia thinks model parameters will keep surging

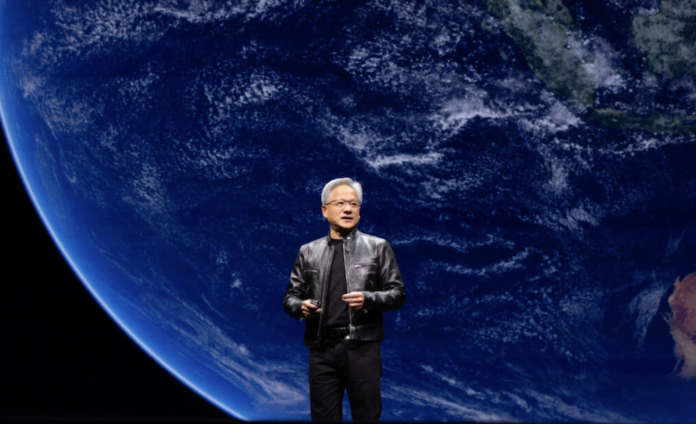

Data centre builders and operators have only just got their heads around how to deal with 100+ kW racks in terms of heat and power, but Nvidia CEO Jensen Huang raised the stakes at Taiwan’s Computex 2024 event, announcing a new GPU architecture called Rubin during his keynote.

The Rubin GPU will feature eight stacks of the newer HBM4 memory, with a roadmap to Rubin Ultra that will eventually feature 12 HBM4 stacks. In addition, Nvidia plans to introduce a successor to its Grace Hopper and Grace Blackwell chips, taking the form of a new Vera Rubin board with the Vera CPU. The company didn’t reveal the key specs for the new board, but it will support the latest NVLink 6 switches, which offer speeds of up to 1.8Tbps bidirectionally.

Vera Rubin was an American astronomer who pioneered work on galaxy rotation rates. By studying galactic rotation curves, she uncovered the discrepancy between the predicted and observed angular motion of galaxies – work that provided some of the strongest evidence for the existence of dark matter.

Staying ahead of the pack

Nvidia is renowned for staying ahead of the pack and, given its overwhelming market share, the only way is down if it doesn’t. The announcements effectively spell out its AI roadmap for the next few years, but they also demonstrate that the plan is to make models bigger and bigger in the hope they will get better and better. This has big ramifications for future data centres in terms of physical size and power use.

It is important not to jump too far ahead, given that the next-generation Blackwell GPUs probably won’t be with us until later this year, when we’ll see the B100 and B200 GPUs beginning to arrive. Huang then pointed out that Blackwell Ultra will be next – which will be compatible with 12-high HBM3E memory stacks. The roadmap will see the Rubin architecture turning up in 2026, followed by an Ultra version a year or so later, all in line with Nvidia’s “one year rhythm” for data centre releases. Smaller chip makers will struggle to match that sort of pace.

As Dr Richard Windsor points out in his latest Radio Free Mobile blog, Blackwell can train the same model as Hopper on one-quarter the number of GPUs and one-quarter the power consumption. This will become a key factor when deploying clusters because power consumption is one of the biggest costs in running a data centre.
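
To put the one-quarter claim into rough numbers, here is a minimal Python sketch. The cluster size, power draw, training duration and electricity price are all hypothetical placeholders chosen for illustration, not figures from Nvidia or from Windsor’s blog; only the one-quarter ratios come from the paragraph above.

```python
def training_energy_cost(power_mw, days, price_per_kwh):
    """Electricity cost of a cluster running at a constant power draw."""
    hours = days * 24
    energy_kwh = power_mw * 1000 * hours  # MW -> kW, then kW * h
    return energy_kwh * price_per_kwh


# Hypothetical placeholders, for illustration only.
HOPPER_GPUS = 8000
HOPPER_POWER_MW = 16.0
TRAINING_DAYS = 90
PRICE_PER_KWH = 0.10  # assumed flat electricity price in dollars

# The claim from the text: one-quarter the GPUs, one-quarter the power.
blackwell_gpus = HOPPER_GPUS // 4
blackwell_power_mw = HOPPER_POWER_MW / 4

hopper_cost = training_energy_cost(HOPPER_POWER_MW, TRAINING_DAYS, PRICE_PER_KWH)
blackwell_cost = training_energy_cost(blackwell_power_mw, TRAINING_DAYS, PRICE_PER_KWH)

print(f"Hopper:    {HOPPER_GPUS} GPUs, energy cost ${hopper_cost:,.0f}")
print(f"Blackwell: {blackwell_gpus} GPUs, energy cost ${blackwell_cost:,.0f}")
print(f"Saving:    ${hopper_cost - blackwell_cost:,.0f}")
```

Whatever placeholder values are used, the energy bill for the same training run scales directly with the power draw, so a quarter of the power means a quarter of the electricity cost over the same period. The sketch ignores cooling overhead and hardware purchase costs.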

Linear or exponential

Nvidia also laid out its response to last week’s launch of the Ultra Accelerator Link (UALink) advocacy group, which is essentially trying to break the market leader’s inter-chip communication dominance with a new, non-NVLink standard. The company covered multiple bases by unveiling its Ethernet roadmap at Computex. Huang announced plans for the annual release of Spectrum-X products to cater for the growing demand for high-performance Ethernet networking for AI. Its Spectrum X800 is designed to link tens of thousands of GPUs, with the X800 Ultra designed to link hundreds of thousands of GPUs and the X1600 to link millions of GPUs.

As Windsor points out, the AI industry intends to keep growing its models to trillions and then tens of trillions of parameters, hoping for exponential performance improvements. However, there are signs that exponential parameter growth is delivering only linear improvements in performance. If this turns out to be true, the return on investment for training ever-larger models evaporates.
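
The shape of that argument can be sketched with a toy model in Python. Everything here is an assumption made for illustration: the `toy_score` function, the five-point gain per tenfold increase in parameters, and the idea that training cost grows in proportion to parameter count. None of it is a measured result.

```python
import math


def toy_score(params, gain_per_decade=5.0):
    """Hypothetical benchmark score: a fixed gain for every 10x in parameters."""
    return gain_per_decade * math.log10(params)


# Model sizes growing exponentially: 100 billion up to 100 trillion parameters.
sizes = [1e11, 1e12, 1e13, 1e14]

rows = []
for small, large in zip(sizes, sizes[1:]):
    gain = toy_score(large) - toy_score(small)
    extra_params = large - small  # assumption: cost scales with parameter count
    rows.append((large, gain, gain / extra_params))

for large, gain, gain_per_param in rows:
    print(f"{large:.0e} params: +{gain:.1f} points, "
          f"{gain_per_param:.2e} points per extra parameter")
```

Under these assumptions each tenfold jump in size buys the same fixed improvement, while the improvement per extra parameter, and so per extra dollar, falls by roughly a factor of ten at every step. That is the sense in which the return on investment evaporates.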

While this would contribute to the end of the AI bubble, the sheer performance of these architectures gives telcos an opportunity to be more radical at their network edges. However, given the declining enterprise revenues across many telcos, this door is at risk of closing before many get a foot in it.